Everything you wanted to know about AI... but were afraid to ask

As a new exhibition examining artificial intelligence opens at London’s Barbican, Nexus Studios’ creative director, Alex Jenkins, tells shots about a ‘warmer’ vision of AI and how we can be part of its future.

How do you distil the entirety of the already-overwhelmingly-complex-and-changing-as-we-speak phenomenon of Artificial Intelligence into an accessible and entertaining interactive exhibit? AI: More than Human, a groundbreaking new exhibition at the Barbican Centre, attempts to do just that.

Taking visitors through historical representations of artificial intelligence like Jewish golems and Japanese kami - and littered with pop cultural allusions from Tron to Transformers, the exhibit, which opens today [Thursday May 16], offers a sweeping look at AI’s past, present and potential.

At the heart of the exhibition is Data Worlds, an examination of AI’s capabilities and place in our world. Produced by Nexus Studios, a set of seven interactive works demonstrates the omnipresence and unlimited potential of Artificial Intelligence, from face recognition to data collection to a fascinating simulated conversation with an AI.

We caught up with Nexus Studios’ creative director, Alex Jenkins, to find out more.

Above: Nexus Studios' Alex Jenkins interacts with one of the Data Worlds exhibits.

How did the idea grow from conception to execution?

The place is called Data Worlds and its focus is on AI in our world today – how it’s being applied, how it’s being used in all our infrastructures and systems, from commercial to digital government and on a very personal level, too. So the concept grew in collaboration with the Barbican. When we were requested to do this, our role was to conceive that sort of data space via the idea of the role that AI is playing. The way the piece is run is, it aims to raise your awareness of how AI is very firmly integrated in the fabric of our world.

Why was it important for the exhibition to be interactive?

The Barbican had a desire for people to engage with this piece in a different way than perhaps a normal exhibition would. Because it is about AI, it’s about the future, it was an opportunity to think in more dynamic and surprising ways. It allows you to approach subjects with a slightly different perspective. By going up to Seeing Is Believing, the YOLO (You Only Look Once)-powered [exhibit], we’re just directly using that technology and putting you on the spot. If no one’s standing there, the plinth is formless, it’s black. You are the subject. It does that far more visually than just explaining that we’re being categorised.

You would have a conversation with an intelligence; you would have an exchange. So we’re using simple tricks - proximity, getting you close, using a camera, having a conversation by nodding your head and shaking your head, it uses things that you do with something that you expect to have an intelligence and not just, say, pressing the button on the left. They all work in our favour rather than just offering explanations. Standing under Meet the AI, for example, it greets you. It’s very simple but a key change in perception.

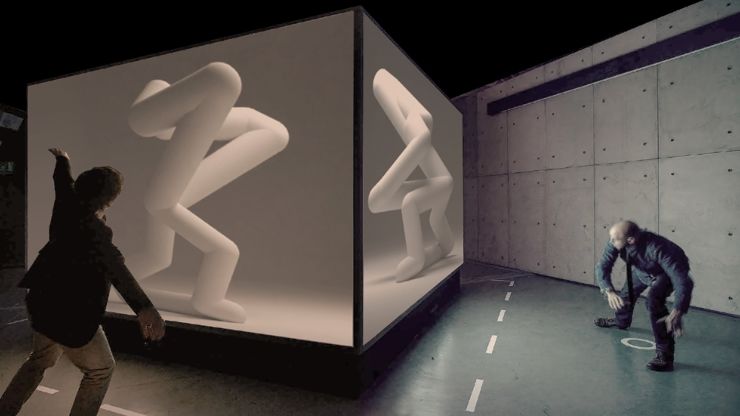

Above: Future You, Universal Everything; Co(AI)xistance, Justine Emard

What vision of AI did you want to present?

My hope is that people will realise that AI isn’t some enemy or uberfiend that’s going to take our jobs away or destroy the earth. Don’t get us wrong, there are very real conversations about the negative ramifications that are happening. But currently, people don’t really understand what it’s doing for us or what it means. So by exposing all the ways that AI is being used in things that we’ve come to take for granted or find very useful, we realise it does have an active and good role. I just wanted people to have a broad awareness that it is here, we are using it, it’s not going to go away. I think we can, if we want to be, be part of its future.

How did you want the exhibition to interact with pre-existing media tropes?

Sci-fi is so influential in our vision and dreams of what future technology can be, and undoubtedly informs that. I couldn’t help but think about Stanley Kubrick’s 2001: A Space Odyssey when we were conceiving this piece, and the iconic nature of the monoliths. Until now, AI has been very hard and cold and geometric. Whereas, actually, AI for us humans is a bit warmer, potentially.

I was very conscious of the way computer research is portrayed, as well. It’s often very visceral and a little raw-looking. So actually there’s boxes that do go around that classify as that, the way they use only RGB - web-safe colours - is a really interesting language of its own. It’s a bit more maths-based. So we sought to bring some of that language into the space where we’re telling those stories. We obviously can’t use it on everything, but it’s something we carried with us. Hopefully that grounds it more in reality and research more than film tropes to find solutions to express the story.

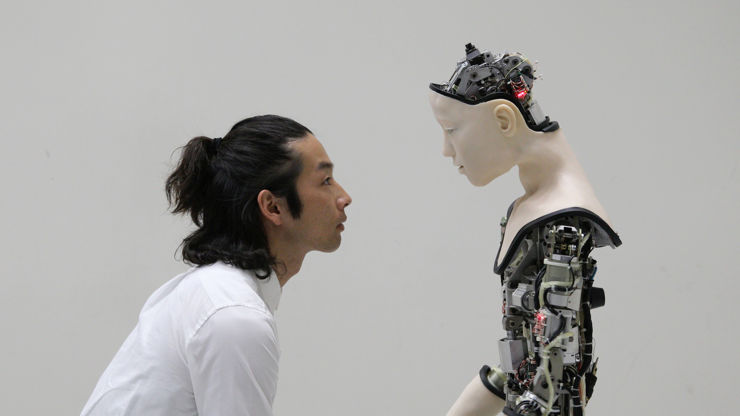

Above: What a Loving and Beautiful World, teamLab; Alter, Hiroshi Ishiguro

What else did you consider when trying to visualise the space?

There is an overall vision for the space, which the appointed architects were there to care for. You have to be responsible and work within the parameters you have, as well. But particularly for Data Worlds, this idea of what I was referring to in our piece quite early on as “nodes.” They’re kind of a network system. Each one of those talks about a different piece of AI technology, but they work together to be more intelligent, to be more intuitive, and not things that work in isolation. And the reason we fronted all of them with this smooth, black surface is to make them as reductive and formless as possible. At a data level, they don’t have any shape left. They’re to be interpreted, they get their form when we find a way to apply them or the AI comes up with a way to think about it and be useful to us. We also asked to make kind of a definitive separation between that world and the rest of the space, as well. So there is a logical difference between the exhibition-centric opening to something that gets less and less material as it goes through.

What was it like collaborating with so many scientists and researchers?

It’s quite mind-blowing and overwhelming to work in this space. It’s such a fantastic topic, but it’s really deep and complex and it’s so easy to get lost down a rabbit hole. Trying to crystallise that and select a small handful of pieces that we could bring to the public was really difficult. You feel like you’ve only just scratched the surface. I think also another one of the challenges is trying to put that in layman’s terms for all of us. I had to get my head around it, as well, as well as all of my team and look for ways that we could find a nugget to hold on to that would express it in a way that we could get some meaning and understanding from.

Above: What a Loving and Beautiful World, teamLab; Hype Cycle: Machine Learning, Universal Everything

What was the biggest challenge in bringing your part of the exhibit to life?

Making sure we could verify what we were talking about was very important, and that took some time. One of the key ones was taking such a complex and difficult subject and trying to distil that into something that would be narratively entertaining. That was very important to us at Nexus, because all our work is predicated on great craft and storytelling. Interactive arts is very much part of that. We don’t do technological builds for the sake of them. We have to tell that story, we have to make it human and emotional and connective. Pulling the science and finding a story that underpins that that can delight or surprise people was our challenge.

Can you talk us through the seven pieces that are part of Data Worlds?

Micro to Macro gives you a snapshot of all the different levels that AI is observing, helping, building patterns and making recommendations. From finding tumours and cancer cells to helping make better predictions in weather patterns and even finding exoplanets.

Seeing is Believing is a very simple installation based on YOLO, which is a real-time object detection system. That’s a very simple conceit to just let you know that we’re being observed on all levels and that we’re being classified and categorised, so that the AI has an understanding of our world.

Data Faceprint shows how data is the key to enabling this whole intelligence. We are feeding AI. From there, we take a swing at taking a small look at interpretations, so where AI starts to provide a role for itself in the world.

Human or AI is based on the idea of automated journalism. It’s a very simple game that asks you to work out who wrote the article, is it an AI or is it a person, can you tell the difference and does it matter?

Above: Waterfall of Meaning, Google PAIR; Method

Learning to See, a piece by Memo Akten, swings things round a bit. Akten’s trained the AI to see only certain things. So for instance, seascapes and waves, oil paintings or just flower arrangements. But when you train the camera on other things - in this case, collections of household items and dusters and wires - because the AI's only learned how to see the world in classical oil paintings, it interprets it that way. Which raises the question, how does AI know what’s right or wrong or if something should be categorised as X or Y? It’s in the hands of who’s teaching it.

Hello, is it Me You’re Looking For? seeks to explain the role of AI in dating and companionship, based on the immortal lines of Lionel Richie. In this simple animated poster, it explains how AI is unlocking the heart, and learning about us and offering us back something that we really, truly want.

After realising how AI is in every facet of our lives, we take you to Meet the AI, where you have a pretend conversation with the voice of the AI, who asks you rhetorical questions which allow you to reflect on how we feel about what we’ve just seen. Our aim is to offer a counterpoint: neither too positive nor too negative, but just to reflect the conversation that’s going on right now, and to understand that we are part of shaping that. We can choose to have a responsibility towards it, and hopefully move a bit further on from the black and white stance that people have been taking towards it.
