How Preymaker used Unreal to form a fully-fledged short

With BLUE, creative and technology studio Preymaker set about creating a real-time animated short film entirely in the cloud, achieving startling levels of detail without the use of compositing. shots speaks to executive producer and founder/chief creative Angus Kneale and director Robert Petrie about how the tech might change the future of content creation.

How did the project come about?

Angus Kneale: We had worked pretty extensively with Unreal Engine in the past, perfecting photo-real cars. We also knew that Unreal had continued to advance since then, and we were excited about all the creative possibilities of real-time.

When we started Preymaker we wanted to create a stand-out piece of work that was creatively and technically inspiring. We knew that Unreal Engine and the cloud were going to be at the heart of our future so we needed to create something that would redefine the way content would be made… and then the pandemic happened.

At the beginning of the pandemic we had the incredible luxury of time … so we were able to dedicate a lot of it to brainstorming and developing ideas, and we had quite a few on the table. BLUE was one of them and it just felt right. We also heard about the Epic MegaGrants program from Epic Games, so we pitched the idea … the stars aligned, so to speak, and we were off.

What first started off as a technical mission quickly became something creatively quite special that we all fell in love with.

Credits

- Production Company Preymaker

- Director Rob Petrie

- Editing Consulate

- Music/Sound AMBR

- Audio Post Heard City

- Executive Producer Angus Kneale

- Executive Producer Melanie Wickham

- Executive Producer Verity Kneale

- Editor Chinwe Chong

- Executive Producer Michelle Curran

- Senior Producer Michael Perri

- Sound Designer Daniel Nolan

For the less tech-savvy amongst us, how does using Unreal Engine and not needing compositing differ from the norm in 3D animation?

Robert Petrie: Unreal really comes into its own on the back end of the production. You are using the engine to take all the shots to completion within Unreal itself. A huge goal when starting BLUE was to have no compositing on any of the shots and cut out that whole task completely. The software gave us the ability to use fully in-camera techniques such as depth of field, fog and motion blur. This meant that there was no real need to export render passes and then recombine and composite them in Nuke.

We really wanted to see if this way of making animation and telling a story was even possible or, more to the point, ready to be put into production for the short film. I started playing around with Unreal at home a couple of years ago and was blown away by how complex you could make a scene and environment. It was a little bit of a light bulb moment, in all honesty. I really thought, why wouldn’t you make animation content within this game environment? There was a phrase that I had constantly in my head and that was “shoot it all in-camera.” This was the holy grail to me: to be able to make final picture and pixels in one environment.

It has never made any sense to me why I would work in 3D and make something look the way I wanted, then have to recomposite it and put it back together. Unreal gave us the opportunity to literally have everything in-camera and just export the final sequences, which was incredible. To get everything the way you wanted through the lens of the camera in a virtual environment has been a dream for many years, and this really made that a reality.

Can you explain the process of working in 'real-time'?

RP: One of the key benefits of working in real-time is that you can create assets that are ready to be used on multiple platforms and in multiple formats - whether for cinema, for video games, or something else - so you’re significantly streamlining both the process and the end result. We kept discovering through this project that the process is very different from a traditional 3D and animation pipeline in many ways.

There are no real shortcuts or hacks, so to speak, in a real-time workflow - this is in contrast to traditional commercial 3D methods, which are full of tricks and hacks to get to a final image, which you then have to export and essentially recreate to the specs of different formats. I loved that we were building assets that could be used not just for linear filmmaking, but also for a mobile game, a VR experience, a metaverse platform and more. That’s really cool and something that’s always been a goal - to make assets and content that have so many uses and are not locked into one medium.

Preymaker can now develop BLUE in so many ways to build this story, which is beyond exciting.

How did you approach the project? As a narrative story? A tech demo? A bit of both?!

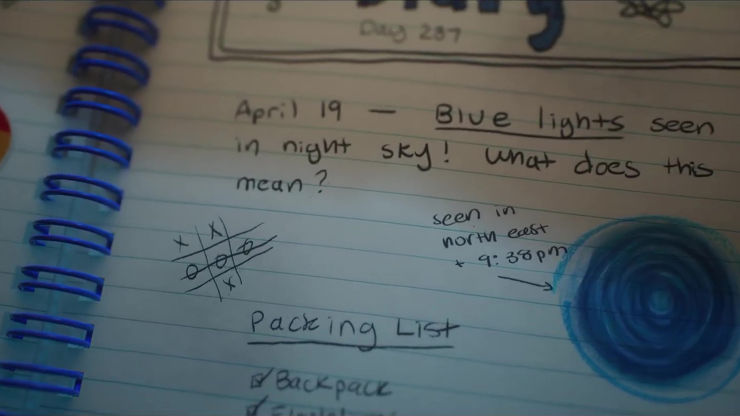

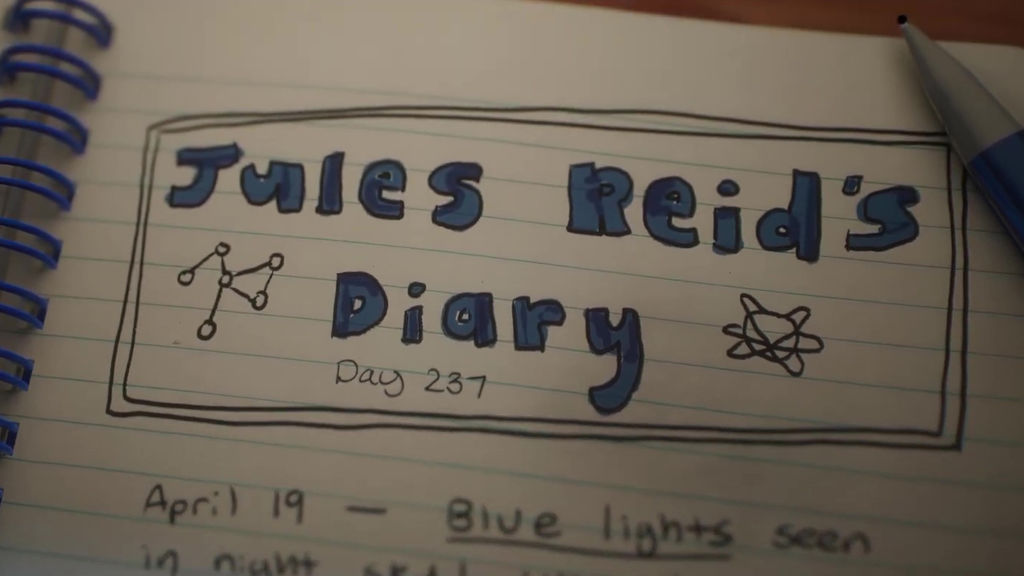

RP: Story and characters always take precedence over technology in my eyes, but heading into production we had to build scenes, animation rigs and models in a specific way that could be used within the Unreal game engine - so the idea from the start was that this story could live across multiple platforms and formats simultaneously. It was very similar to how you approach making any content, in that you create a production approach as you are developing the story, designing characters, creating storyboards and get that all worked out before you head into the technical aspects of the process and production.

We never really saw it as a tech demo; we just wanted to make something that we hoped people would be into when they watched it. That being said, being able to demonstrate that creating animated content within this environment was possible, and that it gives us the pictures we had worked hard to achieve, was incredibly satisfying. This is just a tiny step into the ocean of what we believe is possible, so seeing what we made with such a small team of people is still incredible.

There is always this feeling in the back of your mind when you start down the road of something you have never done before: you hope your convictions and your belief that it will work are enough, but there are always crossed fingers and a lot of hope. It was by no means an easy process to get to the finish line - first steps never are - but what we learned from making this has only strengthened our belief that building worlds and telling stories in this interactive environment works, which makes the possibilities of what we can make moving forward really exciting.

How did the project change as it went along?

RP: Flexibility, learning and adaptability were the key things for us as we went along. There were a few technical challenges we had to overcome, which were mainly in the animation rig of the character Jules and having her move naturally and the way we wanted her to. We wanted to be able to give the animators as many controls as possible to get to a fidelity of animation we were all striving to hit.

Rigging for Unreal is a different process from how we would rig this in a more film-orientated pipeline, so that took a few weeks to iron out. We were all new to this entire production process, so the learning curves had to be incredibly short and we had to pivot quickly whenever we found something was not working efficiently.

That was the perk of being such a small team of people. It allows you to be nimble and adapt so quickly.

What were the major learnings of yourself and your team from working in this way? What are the pros and cons?

RP: I’ve never worked on anything where I have learned so much with regard to every single step of the Unreal Engine production process. When we all started on BLUE, our Unreal experience was very minimal and it really felt like the scene in Indiana Jones when he raises his foot and steps out onto the ledge. That was all of us, but I was surrounded by such talented people that even on days when things were breaking, we always believed we could work out any tech issues that came our way.

One of the big learnings from it all is that planning is critical when making content in a real-time environment. Every single asset has to be made correctly and in a way that can be run in real-time. Shortcuts will only harm you in the long run, so take the pre-production time to really plan every single detail out. Not having to render any of our frames on a render farm was incredible. Instead, we would just export the finished animation and lighting sequences at over 2.5K resolution, which on average took 4-10 minutes for a 10-15 second shot. That was one of the huge pros: we weren't waiting hours for frames to render or incurring costs such as cloud rendering.

Another huge pro was feeling like you are literally on a film set, moving lights around, changing where props should live in real time and applying everything that you can do in the real world in this real-time environment. It really just felt like being a D.O.P. in a real-world environment. Pump in more atmosphere, change out the lens depending on the framing of the shot, set the focal length at will. That was mind-blowing to me. To be able to have that level of interaction within a 3D world felt like a new beginning and a freedom I have never experienced in the more traditional route of 3D animation.

Working in the cloud was also such a game changer. At no point in the project was the team together in one room, which I still find a little mind-blowing. We were in L.A., New York, Europe and South Africa, and being a 100% cloud business made the process a lot easier in my eyes. It again had its learning curves, but what we managed to do using this infrastructure was pretty remarkable.

In terms of cons, it was really just us learning something brand new while in production, but that was always going to be the case with BLUE, since it was a first for every single member of the team at every level of production.

How does this tech impact how productions are put together in the future? Is there a way that assets can be utilised for different means?

This really does feel like we have taken a step in a new direction.

We really wanted to make an adventure story that could be told and made in a different way. The old way of thinking is that you make one piece of content or IP first - a film, a video game, an event or an experience - and then grow it into many other things later. The new way of thinking about content is that these can all exist simultaneously, developed together as part of nonlinear, chapter-driven storytelling across multiple platforms all at once. Nonlinear storytelling is the holy grail for me.

How could we develop a story from the beginning that could be watched, played and experienced across multiple platforms and devices? What we have made is purely just the introduction and very first dip of the toe into the ocean. The possibilities of what this can become are really pretty gigantic.

What sort of stories will benefit from this process?

It really might just be a shift in who can make content at a high quality, with so many possibilities in a real-time and interactive application. Traditional animation has been, and still is, expensive if you want to follow in the footsteps of big animation studios. 3D and 2D software licenses are a large cost, especially if you wish to scale, and there are huge costs for cloud-based rendering as well.

Software such as Unreal and Blender gives people the opportunity to realise their ideas and vision without taking on some of these high costs, which is ultimately an amazing thing in my eyes. If someone has a great idea, why should software be a barrier to making it come to life?

There will always be storage costs, as Unreal projects can grow large in data size, but I love that anyone with a fairly decent desktop computer or laptop can download it, launch it and get into it to help realise a creator's vision.

To me, it’s all about putting tools into the hands of people to make new and great things.

What's up next for you guys?

We have been working on some exciting developments in production and have connected the main character Jules to a motion capture suit as well as setting up a virtual camera rig that works in real time using any mobile device. It’s really exciting to be able to see the character move and perform in real time and gives us the potential to explore and develop new and exciting possibilities for content creation.

The dream would be to use motion capture as a base of motion and then apply specific animation styles to the data which will filter the motion and essentially apply our animation style. By creating a library of hand-animated sequences of our characters such as walk cycles, arm movements and specific character signature body language, we could start using machine learning and A.I. to essentially learn how Jules moves and interacts with the world.

This is a little ways away from being a reality but I believe it is going to happen fairly soon. The story is the driving force for all of this technology.

We want Jules to boldly go where no one has gone before!