Is this the first ad generated entirely by AI?

Using GPT-4, Midjourney, Runway Gen2, Eleven Labs, and SOUNDRAW, AI enthusiast PizzaLater managed to create a horrifying fake commercial for a pizza restaurant, Pepperoni Hug Spot, generated from only a few prompts. Surreal experiment or terrifying glimpse of what's to come? Jamie Madge sat down with the creator to find out.

On Monday 24th April, a Redditor named PizzaLater posted a surreal fake commercial to the forum, describing it as a "wasted 3 hours of [his] life".

Three days later, the video has appeared in news reports, become the talk of Twitter, and left filmmakers and advertisers both amused and quaking at its creation. Has the world just seen the first entirely AI-generated ad?

Utilising GPT-4, Midjourney, Runway Gen2, Eleven Labs, and SOUNDRAW AI Music, plus a splash of After Effects to piece it all together, PizzaLater's entertaining experiment is both a funny novelty and an ominous portent of what's to come. We sat down with them to see how the pie was cooked up.

How long have you been playing around with generative AI tools? What sparked your curiosity?

I gained access to Midjourney in June last year and was immediately hooked. My mind was blown that a simple text prompt could result in a unique image being created almost instantly.

As of today, I've generated just over 30,000 images with Midjourney, and I don't plan on stopping anytime soon. It has been a fantastic outlet for creative exploration and even helped me conceptualize a few things for my day job.

More recently, I gained access to ChatGPT and then GPT-4, which blew my mind once again. Now, with access to Runway Gen2, it feels like things are moving faster than I can keep up.

When did you come up with the idea of creating a full commercial from AI? What led you there?

After gaining access to Runway, I started playing around with some silly prompts and quickly realized that most of the generated content wasn't necessarily suitable for any serious applications.

After generating a few videos of people (attempting to) eat pizza, I felt inspired to create something larger using all the AI tools at my disposal.

As someone who works in motion graphics and editing, I found this right up my alley.

I wanted to see how quickly I could generate a script, create visuals/audio, and piece it all together!

Above: A handful of PizzaLater's incredible Midjourney creations.

What was the initial prompt for the script? How much did you play with prompts before you hit on the right note?

I asked GPT-4 to "write me a silly script for a pizza restaurant commercial using broken English." It generated three scripts in total, and I picked my favourite parts to assemble the final script used in the video.

Many of the comments on Reddit suggested that the voiceover script must have been touched up by a human, but I can assure you it is 100% AI-created.

After assembling the script, I asked for 10-15 meme-worthy names for a pizza restaurant, and "Pepperoni Hug Spot" was my favourite by far.

Being familiar with Midjourney, I had no trouble generating a few images like the restaurant's exterior and some pizza backgrounds.

The Runway Gen2 videos were created simply by requesting "a happy man/woman/family eating a slice of pizza in a restaurant, tv commercial."

The project uses GPT-4, Midjourney, Runway Gen2, Eleven Labs, and SOUNDRAW AI Music to create the final piece. Could you give us a layman's rundown of what was fed into each stage and how each led into the next?

I was initially inspired by the video clips generated by Runway Gen2, so that's where I started.

I then used GPT-4 to develop the script and restaurant name.

Next, I plugged the script into Eleven Labs' "Voicelab" and had it produce 3-4 different voiceover reads of the script, allowing me to piece together the best takes.

After that, I made a quick visit to SOUNDRAW to select some appropriate background music.

Above: Stills from Pepperoni Hug Spot.

At what stage did you realise you might have created something bordering on horror? How much back and forth was there and how long did the project take in total?

I knew pretty quickly that the end result would be funny and somewhat grotesque. I just didn't anticipate the internet appreciating it so much.

As someone old enough to remember YouTube's birth, I got my start in editing there years ago with a few poorly made spoof commercials. This project was a natural progression.

I'd estimate that I spent just over three hours from start to finish. Once I acquired all the necessary pieces, I jumped into After Effects to quickly edit everything together. I then created some simple transitions and graphic elements and hit render!

The film only hit Reddit three days ago and is already proving something of a viral smash. Were you anticipating such a response?

I first shared the video with friends who are also exploring new AI technology, and from there, I posted it on Reddit. I had no idea it would blow up like it has!

The internet is a strange place indeed.

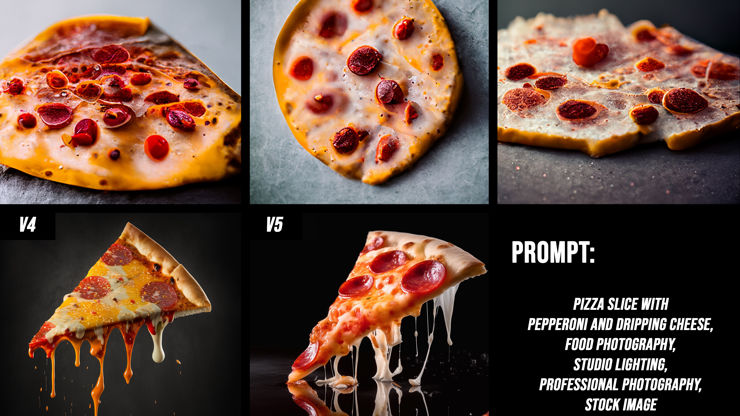

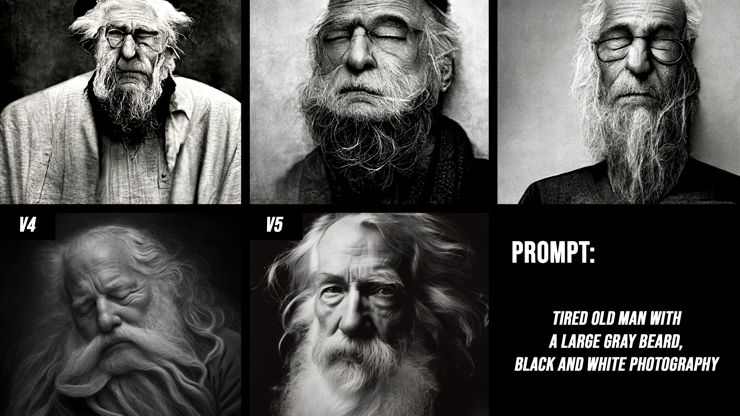

Above: Comparison images created by PizzaLater, showing how the same prompt renders across Midjourney versions 1 to 5.

Work like this, surreal as it is, is something of a wake-up call for the advertising and production industries. Where do you see generative AI going with regard to content creation?

As a video content producer, I'm both excited and concerned about where this technology will lead us. For now, I'm trying to use it (semi-)responsibly and familiarize myself with it as much as possible.

I believe things will really go off the rails when text-to-video becomes closer to photorealism. Imagine a world where an individual or small group of savvy folks can pick up production on a fan-favourite Netflix show that doesn't get renewed or even revive an old cult hit that never really had its time in the spotlight.

I think all of this is possible and will happen much sooner than we think.

I'll leave the discussion regarding deepfakes and the potential misuse of these tools for rapidly creating misinformation to more knowledgeable minds.

What's your next AI project?

I may or may not be currently working on a new commercial for a soup-only buffet-style restaurant called 'The Slurp Shack'.

GPT-4 made me sign an NDA, so I can't really talk about it.

You can find PizzaLater on Instagram, YouTube, Twitter and their own site, pepperonihugspot.pizza.